Amazon Web Services has intensified its push into the artificial intelligence chip market with the launch of Trainium3, marking the latest effort by cloud providers to reduce their dependence on Nvidia’s dominant GPU technology while controlling costs and improving efficiency.

The announcement, made at AWS’s annual re:Invent conference in Las Vegas on Tuesday, comes as the AI chip market experiences unprecedented competition, with multiple technology giants developing custom silicon to power increasingly expensive AI workloads.

Amazon’s Trainium3: Specifications and Market Positioning

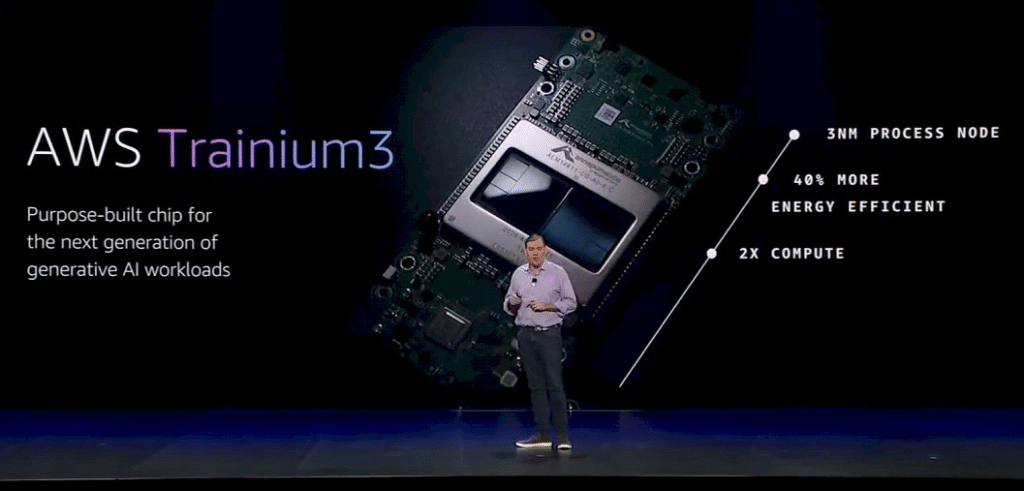

According to AWS Vice President Dave Brown, Trainium3 was recently installed in select data centers and became available to customers on Tuesday. The third-generation chip and system offer significant performance improvements for AI training and inference, with AWS claiming the system is more than four times faster with four times more memory compared to the previous generation.

AWS CEO Matt Garman stated that “Trainium3 offers the industry’s best price performance for large scale AI training and inference,” positioning the chip as capable of reducing the cost of training and operating AI models by up to 50 percent compared with systems using equivalent GPUs, mainly from Nvidia.

The technical specifications reveal substantial improvements in scalability and efficiency. Each UltraServer can host 144 Trainium3 chips, and thousands of UltraServers can be linked together to give applications access to up to one million Trainium3 chips, ten times the previous generation’s capacity.
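As a rough sanity check on those two figures, the short calculation below estimates how many 144-chip UltraServers it would take to reach the one-million-chip ceiling. It is a back-of-the-envelope sketch that assumes every UltraServer is fully populated and ignores any networking or topology limits AWS has not described. The helper name `ultraservers_needed` is hypothetical.

```python
import math

CHIPS_PER_ULTRASERVER = 144      # per AWS's stated UltraServer configuration
MAX_CLUSTER_CHIPS = 1_000_000    # AWS's stated upper bound for linked Trainium3 chips


def ultraservers_needed(total_chips: int, chips_per_server: int = CHIPS_PER_ULTRASERVER) -> int:
    """Smallest number of fully populated UltraServers providing at least total_chips.

    Assumption: every server holds exactly chips_per_server chips.
    """
    return math.ceil(total_chips / chips_per_server)


if __name__ == "__main__":
    servers = ultraservers_needed(MAX_CLUSTER_CHIPS)
    print(f"{servers:,} UltraServers")
```

The result, about 6,945 servers, is consistent with AWS’s description of “thousands” of linked UltraServers.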

Energy Efficiency as Competitive Advantage

Energy consumption has emerged as a critical factor in AI infrastructure development. AWS reports the chips and systems are 40% more energy efficient than the previous generation, addressing growing concerns about the AI industry’s power requirements and data center operators’ operational costs.
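AWS does not spell out exactly how it measures “40% more energy efficient.” Under one common reading, assumed here, the new chips do 1.4 times as much work per unit of energy, which means a fixed workload needs roughly 29% less energy, not 40% less. The sketch below works through that assumption; the function name and the 1,000 MWh baseline are hypothetical illustration values, not AWS figures.

```python
def energy_for_same_work(baseline_energy: float, efficiency_gain: float) -> float:
    """Energy needed for a fixed workload after work-per-unit-energy rises by efficiency_gain.

    Assumption: "efficiency" means work done per unit of energy, so a 40% gain
    (efficiency_gain=0.40) divides the energy required by 1.4.
    """
    return baseline_energy / (1.0 + efficiency_gain)


if __name__ == "__main__":
    baseline_mwh = 1_000.0  # hypothetical energy for a run on the previous generation
    new_mwh = energy_for_same_work(baseline_mwh, 0.40)
    savings = 1.0 - new_mwh / baseline_mwh
    print(f"{new_mwh:.1f} MWh, {savings:.1%} less energy")
```

Under this reading the hypothetical run drops to about 714 MWh, a saving of roughly 28.6%. If AWS instead means 40% less energy for the same work, the saving would be 40%.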

The Strategic Context: Cloud Providers vs. Nvidia Dependence

Bloomberg Intelligence analyst Mandeep Singh, speaking on Bloomberg Television, provided context for Amazon’s chip development strategy and its implications for the broader AI market.

Singh explained that Amazon, as the largest cloud provider with approximately 50% market share, has historically relied on Nvidia GPUs for AI training workloads—with Google being the notable exception through its custom TPU (Tensor Processing Unit) development.

“What Amazon is doing is really copying that Google playbook where they want to use their own chips,” Singh stated in the Bloomberg interview. “It’s just that they haven’t had that kind of success that Google has had, and they’re trying to speed things up because in the end, all these hyperscalers don’t want to spend 20 to 25% of their CapEx on procuring Nvidia’s chips.”

This capital expenditure concern represents a fundamental business challenge for cloud providers. As AI workloads expand, the cost of purchasing Nvidia’s premium GPUs—which currently dominate the training and inference market—consumes an increasingly large portion of infrastructure budgets.

Google’s Head Start in Custom Silicon

Google’s success with its TPU architecture has provided a model for other cloud providers to follow. Last month, Google released its latest AI model, which was trained on the company’s own in-house chips rather than Nvidia’s, demonstrating the viability of custom silicon for cutting-edge AI development.

According to Yahoo Finance, Google also rattled the industry when reports emerged that Facebook parent Meta was in talks to use Google’s AI chips in its data centers, signaling new competition for Nvidia.

Singh emphasized this competitive dynamic: “I think Google so far is ahead in that. But clearly Amazon is trying to catch up when it comes to their own chips efforts.”

Current Adoption and Customer Base

Amazon’s custom chip strategy has already gained significant traction among major AI companies.

Amazon CEO Andy Jassy revealed that Trainium already represents a multibillion-dollar business, indicating substantial adoption despite the technology’s relatively recent introduction.

AWS customers including Anthropic (in which Amazon is also an investor), Japanese LLM developer Karakuri, SplashMusic, and Decart have already been using the third-generation chip and system and have significantly cut their inference costs, according to Amazon’s announcement.

The Anthropic partnership represents a particularly significant validation of Amazon’s chip technology. As TechCrunch noted, CNBC conducted the first on-camera tour of Amazon’s biggest AI data center, in Indiana, in October; Anthropic is training its models there on half a million Trainium2 chips.

Apple’s Evaluation of Trainium Technology

At the previous year’s re:Invent, in December 2024, Apple revealed that it uses Amazon Web Services’ custom AI chips for services such as search and was evaluating the Trainium2 chip for pretraining its proprietary AI models, including Apple Intelligence.

Speaking on stage, Benoit Dupin, Apple’s senior director of machine learning and AI, said Amazon’s Inferentia chips had delivered a 40% efficiency gain for search services, and that early evaluations suggested Trainium2 could offer up to a 50% improvement in efficiency during pretraining.

This collaboration between two technology giants underscores the credibility Amazon has built in the custom AI chip market.

Trainium4: Strategic Nvidia Compatibility

Perhaps the most strategically significant announcement from AWS re:Invent was the preview of Trainium4, currently in development.

AWS said Trainium4 will deliver another significant step up in performance and will support NVLink Fusion, Nvidia’s coveted high-speed interconnect technology for linking chips within AI server racks. That means Trainium4-powered systems should be able to interoperate with Nvidia GPUs and extend their performance while still using Amazon’s homegrown, lower-cost server rack technology.

This interoperability strategy addresses a critical barrier to adoption. Nvidia’s CUDA (Compute Unified Device Architecture) has become the de facto standard that the major AI applications are built to support, so Trainium4-powered systems that can work alongside Nvidia GPUs may make it easier for AWS to attract large AI applications originally built with Nvidia hardware in mind.

The Broader Competitive Landscape

Amazon’s chip development occurs within a rapidly evolving competitive environment where multiple technology companies are pursuing custom silicon strategies.

Microsoft’s Custom Chip Efforts

Microsoft is aiming to eventually rely on its own custom chips rather than Nvidia’s, though it has faced delays in developing in-house silicon, according to Yahoo Finance reporting.

Nvidia’s Market Position

Despite increasing competition from custom chips, Nvidia maintains overwhelming market dominance, with an estimated 80 to 90 percent share of the market for chips used to train the large language models that power products such as ChatGPT.

According to reporting cited by SmBom, Nvidia’s AI data center chip sales reached $26.3 billion in its second quarter of fiscal 2025 (ended July 2024), roughly matching AWS’s entire revenue for the second quarter of calendar 2024 and illustrating the scale of Nvidia’s AI business.

Nvidia puzzled industry observers when it responded to Google’s successes in an unusual post on X, saying the company was “delighted” by the competition before adding that Nvidia “is a generation ahead of the industry”.

The Custom ASIC Trend

The shift toward custom application-specific integrated circuits (ASICs) represents a broader industry trend. Daniel Newman of the Futurum Group stated that he sees custom ASICs “growing even faster than the GPU market over the next few years”.

Investment and Capital Expenditure Implications

The development of custom AI chips occurs against a backdrop of massive infrastructure investments by cloud providers.

Singh explained the capital expenditure dynamics in the Bloomberg interview: “When you’re running a cloud business, you’re trying to optimize things across the stack. And that’s where Meta is very different from a Google or an Amazon which have a big public cloud business.”

He indicated that capital expenditure efficiency will become increasingly important: “I think what you are going to see is focus more on CapEx efficiency. If Google can deliver a lot more of their $90 billion in CapEx in terms of training and inference and having a cloud business, everyone will be measured the same way, whether it’s Amazon or Meta, and that’s their CapEx efficiency will be a much bigger focus in 2026 than it was in the past two years where there was a gold rush going on in terms of getting these GPUs.”

Apple’s AI Strategy and Internal Challenges

The Bloomberg interview also addressed Apple’s position in the AI landscape, which Singh characterized as lagging behind other major technology companies.

“Apple, when you think about the major players, has trailed in terms of having an AI strategy, making those investments,” Singh stated. “And right now, we are seeing even just yesterday, DeepSeek released their latest model, ByteDance is talking about a model that can be run on your operating system.”

He explained the challenges Apple faces: “There is so much going on at the hardware and at the operating system layer that you feel like Apple is missing out, one because they don’t have any AI models of their own. And also in terms of their partnerships, they haven’t been that upfront about whether it’s OpenAI or Google in terms of making changes to their operating system.”

Singh warned of potential long-term consequences: “Even though the hardware sales haven’t really suffered because of that, when you look two years out, if Apple doesn’t have a good AI strategy or a good model that works natively on the operating system, I think you will start to see an impact on the hardware sales.”

This analysis suggests that Apple’s cautious approach to AI, which technology journalist Jacob Ward described as strategic waiting in an earlier article on OpenAI’s “code red,” may carry risks if the company cannot develop or integrate competitive AI capabilities.

Technical Challenges and Market Realities

Despite Amazon’s significant progress, technical and market challenges remain.

Performance Comparison Questions

According to SmBom, Amazon avoids comparing its chips directly against Nvidia’s and does not submit them for independent benchmarking. This lack of third-party validation makes direct performance comparisons difficult.

Patrick Moorhead, a chip analyst at Moor Insights & Strategy, believes Amazon’s claim of a fourfold performance improvement between Trainium1 and Trainium2 is credible, although he suggests that giving customers more options may matter more than raw performance metrics.

Software Ecosystem Considerations

The software ecosystem represents another significant challenge for custom chip adoption. Nvidia’s CUDA platform has become the standard development environment for AI applications, creating substantial switching costs for companies considering alternative hardware.

According to Technology Magazine, Amazon is building out AWS Neuron, its software development kit for Trainium and Inferentia chips, which is seen as a direct challenge to Nvidia’s established software ecosystem, especially the CUDA platform that AI developers rely on.

However, convincing major players to make the switch from Nvidia is a complicated battle, due to entrenched preferences and perceived risks linked with transitioning to a new hardware system.

Industry Outlook and Future Competition

The Bloomberg interview concluded with Singh’s assessment of the competitive landscape heading into 2026.

Singh reiterated the point, describing the past two years as a period of “making sure you have chips for training our models” and adding: “I think we are moving into a phase where CapEx efficiency will be front and center going forward.”

This shift from a supply-constrained “gold rush” mentality to efficiency-focused competition suggests the AI infrastructure market is maturing, with custom chips potentially playing an increasingly important role.

Market Position Heading into 2026

When asked about winners in the AI arms race for 2026, Singh returned to the importance of cloud business models, contrasting Meta with Google and Amazon, whose large public cloud businesses let them optimize across the stack.

The implication is that companies with large public cloud operations—particularly AWS and Google Cloud—have structural advantages in developing and deploying custom AI chips, as they can amortize development costs across broad customer bases while improving their own margins.

Frequently Asked Questions

What is Amazon Trainium3 and how does it differ from previous versions?

Trainium3 is Amazon’s third-generation custom AI chip designed for training and inference workloads. According to AWS, it offers four times the performance and four times more memory compared to Trainium2, while being 40% more energy efficient. The chip is built on 3-nanometer technology and can be deployed in systems connecting up to one million chips.

Why are cloud providers developing their own AI chips?

According to Bloomberg Intelligence analyst Mandeep Singh, cloud providers want to reduce their dependence on Nvidia GPUs, which account for 20 to 25% of their capital expenditures. AWS claims Trainium3 can cut the cost of training and running AI models by up to 50% compared with equivalent GPU-based systems, and custom chips also give providers greater control over their technology stack and supply chain.

How does Amazon’s chip strategy compare to Google’s?

Google is currently ahead in custom AI chip development, according to Singh. Google’s TPU (Tensor Processing Unit) architecture has been successfully used to train Google’s latest AI models without Nvidia hardware. Amazon is “copying that Google playbook” but is still catching up in terms of proven success and market adoption.

What is Trainium4 and why does Nvidia compatibility matter?

Trainium4 is Amazon’s upcoming fourth-generation chip, currently in development. Its key feature is compatibility with Nvidia’s NVLink Fusion interconnect technology, allowing Trainium4 systems to work alongside Nvidia GPUs. This interoperability addresses a major barrier to adoption, as most AI applications are built for Nvidia’s CUDA platform.

Which major companies are using Amazon’s Trainium chips?

According to AWS and media reports, Anthropic is training AI models on half a million Trainium2 chips in Amazon’s Indiana data center. Other customers using Trainium3 include Karakuri, SplashMusic, and Decart. Apple has said it uses Amazon’s Inferentia chips for search services and has evaluated Trainium2 for pretraining Apple Intelligence models.

Can Amazon’s chips actually compete with Nvidia’s performance?

Amazon has not submitted its chips for independent benchmarking and avoids direct performance comparisons with Nvidia. However, chip analyst Patrick Moorhead considers Amazon’s claimed fourfold performance improvement between generations credible. AWS emphasizes price-performance rather than raw performance, claiming up to 50% cost savings compared to equivalent Nvidia systems.

What role does energy efficiency play in AI chip competition?

Energy efficiency has become critical as AI workloads consume enormous amounts of power. Trainium3’s 40% improvement in energy efficiency addresses both operational costs for data centers and growing concerns about the AI industry’s environmental impact. Energy consumption is now a major factor in total cost of ownership calculations.

How much revenue does Amazon’s chip business generate?

According to Amazon CEO Andy Jassy, Trainium is already a “multibillion-dollar business” that continues to grow rapidly. Amazon has not disclosed specific revenue figures, but the chip business remains a small fraction of AWS’s overall business, which generated approximately $26 billion in revenue in the second quarter of 2024 alone.

What challenges does Amazon face in competing with Nvidia?

Major challenges include Nvidia’s entrenched software ecosystem (CUDA), the lack of independent performance benchmarks for Amazon’s chips, the need to convince customers to migrate away from proven Nvidia-based systems, and Nvidia’s continued innovation. Additionally, most AI frameworks and applications are optimized for Nvidia hardware.

What does this mean for the future of AI infrastructure?

According to Mandeep Singh, the market is shifting from a “gold rush” focused on acquiring any available GPUs to a more mature phase emphasizing capital expenditure efficiency. Futurum Group’s Daniel Newman expects custom ASICs to grow faster than the GPU market over the next few years, though Nvidia will likely maintain a dominant market share for the foreseeable future.

Conclusion

Amazon’s acceleration of its Trainium chip development represents a significant strategic effort to reduce dependence on Nvidia’s dominant position in AI infrastructure while improving cost efficiency for both AWS and its customers.

The launch of Trainium3, with its substantial performance improvements and energy efficiency gains, demonstrates meaningful progress in Amazon’s custom silicon efforts. The preview of Trainium4 with Nvidia compatibility signals a pragmatic approach that acknowledges both the value of interoperability and the entrenched position of Nvidia’s ecosystem.

However, as Mandeep Singh’s analysis indicates, Google maintains a lead in custom chip success, and Amazon continues to work toward matching that achievement. The shift toward capital expenditure efficiency as a key competitive metric suggests that 2026 will see intensified focus on the economic returns from massive AI infrastructure investments.

For the broader AI industry, the competition among custom chips signals a maturing market where cost optimization and energy efficiency join raw performance as critical factors in hardware selection. While Nvidia’s market dominance appears secure in the near term, the growing adoption of custom chips by major cloud providers and AI companies suggests an increasingly diverse and competitive AI infrastructure landscape.

Sources

This article synthesizes reporting and analysis from:

- Bloomberg Television: Interview with Mandeep Singh, Bloomberg Intelligence analyst

- Bloomberg: Original reporting on Amazon’s Trainium3 launch

- TechCrunch: Technical specifications and analysis of Trainium3 and Trainium4

- CNBC: Coverage of custom AI chip development and data center tour

- Yahoo Finance: Market analysis and competitive landscape

- Technology Magazine: In-depth analysis of Amazon’s chip strategy

- SmBom: Financial and technical details of Trainium development

For video analysis: Bloomberg’s coverage of Amazon AI chip strategy